I am currently downloading every episode of The British History Podcast. I wrote a small BASH script to do this:

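    function downloadFullPodcast() {
        local feedUrl=$1
        local xhtml regex tempStr len mp3url number i
        local -a links

        # Fetch the feed and keep each line's text up to the first ".mp3".
        xhtml=$(wget -qO- "$feedUrl")
        regex='.*?\.mp3'
        readarray -t links < <(grep -oP "$regex" <<<"$xhtml")

        local arrLen=${#links[@]}

        for ((i = 0; i < arrLen; i++)); do
            # Pull out the ">...<" portion of the match.
            regex='>.*<'
            tempStr=$(grep -oP "$regex" <<<"${links[i]}")

            # Skip matches that contain no ">...<" portion.
            [[ -n $tempStr ]] || continue

            # Strip the leading ">" and trailing "<" to get the URL.
            len=${#tempStr}
            mp3url=${tempStr:1:len-2}

            # The first link gets the highest number, the last link gets 0001.
            number=$(printf '%04d' "$((arrLen - i))")

            wget -qO "$number.mp3" "$mp3url"
        done
    }

    downloadFullPodcast "https://feeds.feedburner.com/TheBritishHistoryPodcast"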
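
The numbering in the script is easy to misread: each file is named from the array length minus the loop index, so the first link found gets the highest number. Presumably the feed lists the newest episode first, which would make 0001.mp3 the oldest, though that ordering is an assumption about the feed. Here is a dry run of just that step. The `planDownloads` name and the example.com URLs are made up for illustration, and nothing is downloaded.

```shell
#!/usr/bin/env bash
# Dry run of the numbering scheme only: prints the planned file names.
# planDownloads and the URLs below are hypothetical placeholders.
planDownloads() {
    local -a urls=("$@")
    local total=${#urls[@]}
    local i
    for ((i = 0; i < total; i++)); do
        # First URL gets the highest number, last URL gets 0001.
        printf '%04d.mp3 <- %s\n' "$((total - i))" "${urls[i]}"
    done
}

planDownloads \
    "https://example.com/episode-c.mp3" \
    "https://example.com/episode-b.mp3" \
    "https://example.com/episode-a.mp3"
```

With three URLs, the first one is planned as 0003.mp3 and the last as 0001.mp3, so sorting the files by name reverses the order in which the links were found.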

This isn't exactly a novel idea, but I'm surprised at how few devs I come across who have any interest at all in using command-line languages or other scripting languages.

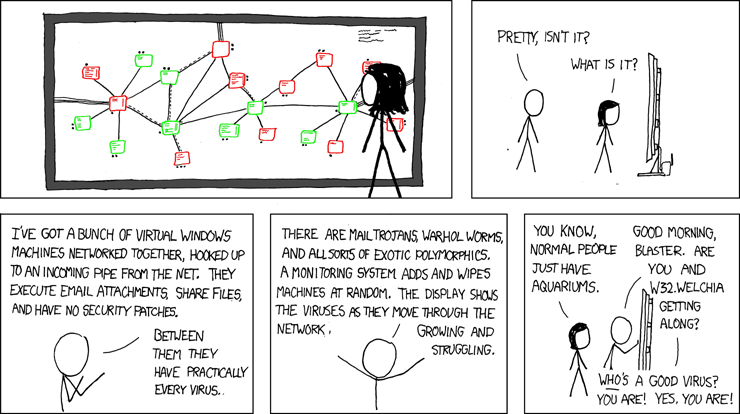

Even before I became a developer, I recognized how powerful and useful the command line could be. When I was an undergrad (with no coding experience whatsoever at the time), someone on a Linux forum helped me to write a BASH script that played a random episode of Scrubs in the Totem player, and I ran this with KAlarm in lieu of an alarm clock. (In fact, I might want to set that up again with a Raspberry Pi or something.)

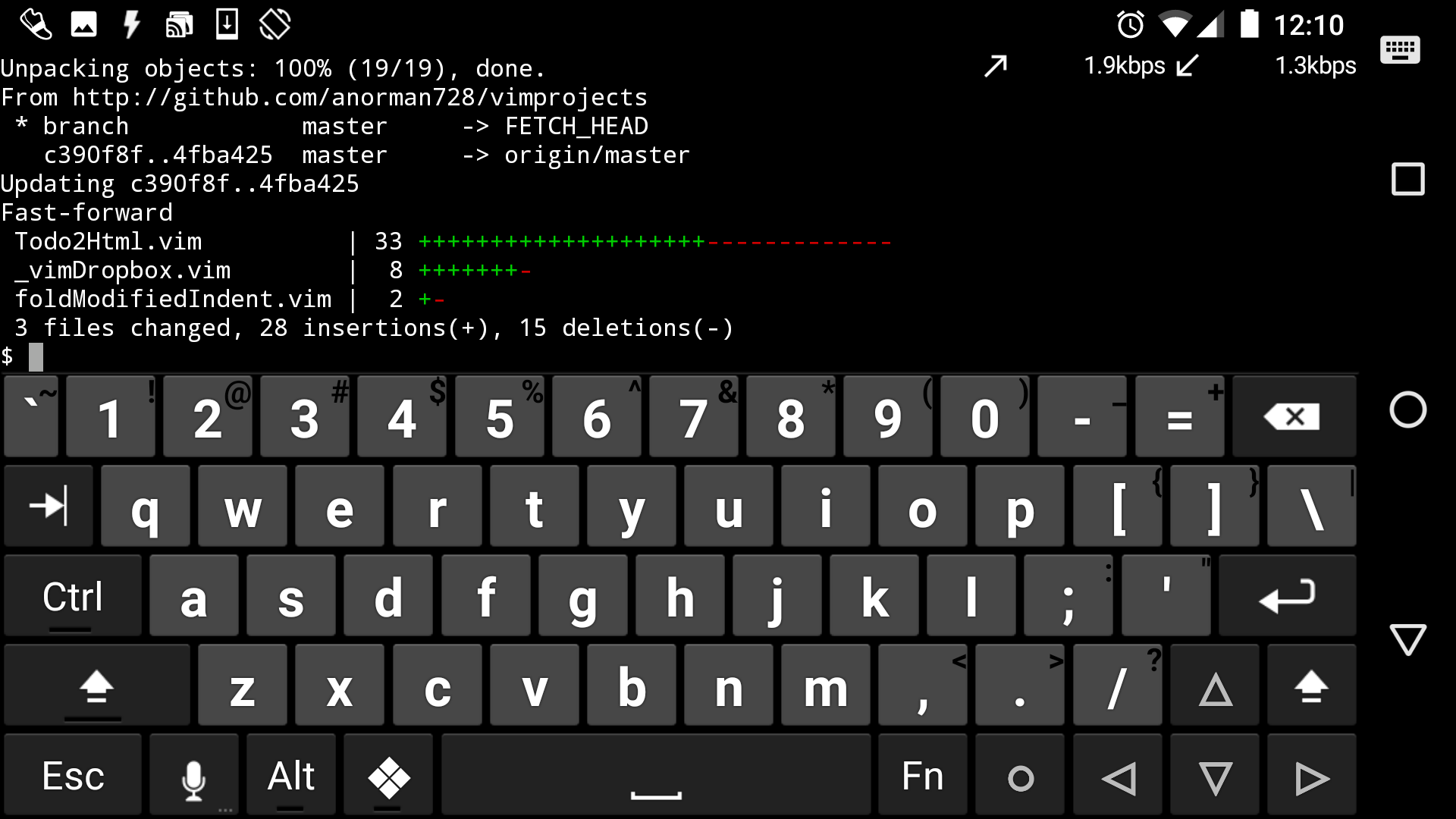

These days, I live in the command line, whether BASH at home or PowerShell at work. I couldn't go without it. My primary tools at work, apart from Firefox to test the code, of course, are PowerShell, Vim, and MySQL Monitor. All three of these are CLI tools. (Also, notably, all three of these are scriptable.) At home, it's the same, except with BASH instead of PowerShell (and having Perl-compatible regular expressions in grep, via the -P option, is really nice).

My office's database is split across almost 50 separate ports, so it's not uncommon for an inconsistency to appear. For example, one dev may add a column to one database but none of the others, then update the code in SVN to match. This causes mysqli to freak out when it can't find the column. It's not uncommon to see emails to the entire dev team saying "Could whoever is in charge of column XYZ add it to all levels?"

I guess the people sending out these emails must refuse to use the command line. I wrote a simple PowerShell function to run a command on all databases. With that function, it's a two-command process to run "SELECT table_name,column_type FROM information_schema.columns where column_name='XYZ'" across all databases to find what table to add the column to and what type to use, and then add the column to that particular table across all of the databases. This takes about 60 seconds, so I've never felt the need to send out a mass email to every dev to add a column. I just find what needs to be added and add it myself.
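
For BASH users, a rough sketch of the same idea might look like the following. This is not the PowerShell function described above, just an analogue under some assumptions: every database is reachable on 127.0.0.1 at a known port, the user name comes from a hypothetical `DB_USER` variable (defaulting to `dev`), and the password lives in an option file such as `~/.my.cnf`. The `runOnAllPorts` name and the port numbers in the usage comment are invented for illustration.

```shell
#!/usr/bin/env bash
# Run one SQL statement against a MySQL server on each port given.
# Host, user, and ports are placeholders; credentials are assumed to
# come from an option file such as ~/.my.cnf.
runOnAllPorts() {
    local sql=$1
    shift
    local port
    for port in "$@"; do
        echo "== port $port =="
        # --protocol=TCP makes the client use the port rather than a socket.
        mysql --protocol=TCP --host=127.0.0.1 --port="$port" \
              --user="${DB_USER:-dev}" --execute="$sql"
    done
}

# Example (ports are hypothetical):
# runOnAllPorts "SELECT table_name, column_type FROM information_schema.columns WHERE column_name='XYZ'" 3306 3307 3308
```

The first call finds which table the column belongs to and what type it has; a second call with the matching `ALTER TABLE` statement would then add it everywhere it's missing.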

I won't go so far as to say something obnoxious like "You're an idiot if you're not using CLI tools," of course, but I do think that these tools offer advantages that a lot of people seem to miss. The most useful tools that I've built for myself have been PowerShell, BASH, and VimScript functions.

What I find is that having a strong grasp (or even a mediocre grasp) of scripting languages, command-line languages included, can really help you to complete a lot of tasks that GUI tools just aren't designed to handle, because those tasks are too specific for the designers of the GUI tools to have anticipated. The example that I have above with the British History Podcast is a decent one. I did that because the podcatcher program on my phone is great for listening to the most recent podcast, but not so great for binge-listening to archived podcasts. I decided to get all of the mp3s so that I could put them in my audiobook reader instead. This could be done in a better scripting language like Python or Ruby, of course, but that's just using a different scripting language. The process remains the same.

Tip 21 from The Pragmatic Programmer by Hunt and Thomas is "Use the Power of Command Shells." "Gain familiarity with the shell, and you'll find your productivity soaring," they say. I would extend that to other scripting languages, and I think Hunt and Thomas would, too, because, in the same chapter, they recommend using a text editor that's programmable. I say, if you're interested, give it a shot. You don't really need to buy a book to learn them (the one PowerShell book that I bought turned out to be a complete waste). Just read a few brief tutorials online, and then you can Google everything else you need to learn as you go. It's been really beneficial to me.